One of the most important theoretical results in linear programming is that every LP has a corresponding dual program. Where, exactly, this dual comes from can often seem mysterious. Several years ago I answered a question on a couple of Stack Exchange sites giving an intuitive explanation for where the dual comes from. Those posts seem to have been appreciated, so I thought I would reproduce my answer here.

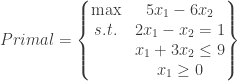

Suppose we have a primal problem as follows.

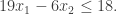

Now, suppose we want to use the primal’s constraints as a way to find an upper bound on the optimal value of the primal. If we multiply the first constraint by 9, the second constraint by 1, and add them together, we get $9(2x_1 - x_2) + 1(x_1 + 3x_2)$ for the left-hand side and $9(1) + 1(9)$ for the right-hand side. Since the first constraint is an equality and the second is an inequality, this implies

$$19x_1 - 6x_2 \leq 18.$$

But since $x_1 \geq 0$, it’s also true that $5x_1 \leq 19x_1$, and so

$$5x_1 - 6x_2 \leq 19x_1 - 6x_2 \leq 18.$$

Therefore, 18 is an upper bound on the optimal value of the primal problem.
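The arithmetic behind this bound is easy to check by machine. The sketch below assumes the primal is: maximize $5x_1 - 6x_2$ subject to $2x_1 - x_2 = 1$, $x_1 + 3x_2 \leq 9$, $x_1 \geq 0$, which is my reading of the worked numbers above. The names `combine` and `objective` are made up for illustration.

```python
from fractions import Fraction

# Assumed primal (reconstructed from the worked numbers in the text):
#   maximize 5*x1 - 6*x2
#   subject to 2*x1 - x2 = 1,  x1 + 3*x2 <= 9,  x1 >= 0
OBJECTIVE = (5, -6)
CONSTRAINTS = [((2, -1), 1), ((1, 3), 9)]  # (coefficients, right-hand side)


def combine(multipliers):
    """Return (coefficients, rhs) of the weighted sum of the constraints."""
    coeffs = [0, 0]
    rhs = 0
    for m, (row, b) in zip(multipliers, CONSTRAINTS):
        coeffs[0] += m * row[0]
        coeffs[1] += m * row[1]
        rhs += m * b
    return tuple(coeffs), rhs


def objective(x1, x2):
    """Primal objective value at the point (x1, x2)."""
    return OBJECTIVE[0] * x1 + OBJECTIVE[1] * x2


coeffs, bound = combine((9, 1))
print(coeffs, bound)  # (19, -6) 18

# Spot-check the bound on a few feasible points. The equality constraint
# forces x2 = 2*x1 - 1, and the inequality then requires 0 <= x1 <= 12/7.
for x1 in (Fraction(0), Fraction(1, 2), Fraction(1), Fraction(12, 7)):
    x2 = 2 * x1 - 1
    assert x1 + 3 * x2 <= 9
    assert objective(x1, x2) <= bound
```

A spot check like this is not a proof, of course; the proof is the chain of inequalities above. It just confirms the multipliers were combined correctly.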

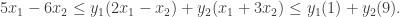

Surely we can do better than that, though. Instead of just guessing 9 and 1 as the multipliers, let’s let them be variables. Thus we’re looking for multipliers $y_1$ and $y_2$ to force

$$5x_1 - 6x_2 \leq y_1(2x_1 - x_2) + y_2(x_1 + 3x_2) \leq y_1(1) + y_2(9).$$

Now, in order for this pair of inequalities to hold, what has to be true about $y_1$ and $y_2$? Let’s take the two inequalities one at a time.

The first inequality:

$$5x_1 - 6x_2 \leq y_1(2x_1 - x_2) + y_2(x_1 + 3x_2)$$

We have to track the coefficients of the $x_1$ and $x_2$ variables separately. First, we need the total $x_1$ coefficient on the right-hand side to be at least 5. Getting exactly 5 would be great, but since $x_1 \geq 0$, anything larger than 5 would also satisfy the inequality for $x_1$. Mathematically speaking, this means that we need $2y_1 + y_2 \geq 5$.

On the other hand, to ensure the inequality for the $x_2$ variable we need the total $x_2$ coefficient on the right-hand side to be exactly $-6$. Since $x_2$ could be positive, we can’t go lower than $-6$, and since $x_2$ could be negative, we can’t go higher than $-6$ (as the negative value for $x_2$ would flip the direction of the inequality). So for the first inequality to work for the $x_2$ variable, we’ve got to have $-y_1 + 3y_2 = -6$.

The second inequality:

$$y_1(2x_1 - x_2) + y_2(x_1 + 3x_2) \leq y_1(1) + y_2(9)$$

Here we have to track the $y_1$ and $y_2$ variables separately. The $y_1$ variable comes from the first constraint, which is an equality constraint. It doesn’t matter if $y_1$ is positive or negative; the equality constraint still holds. Thus $y_1$ is unrestricted in sign. However, the $y_2$ variable comes from the second constraint, which is a less-than-or-equal-to constraint. If we were to multiply the second constraint by a negative number, that would flip its direction and change it to a greater-than-or-equal-to constraint. To keep with our goal of upper-bounding the primal objective, we can’t let that happen. So the $y_2$ variable can’t be negative. Thus we must have $y_2 \geq 0$.

Finally, we want to make the right-hand side of the second inequality as small as possible, as we want the tightest upper bound possible on the primal objective. So we want to minimize $y_1 + 9y_2$.
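The restrictions derived so far can be collected into a small checker. This is only a sketch, again assuming the primal is: maximize $5x_1 - 6x_2$ subject to $2x_1 - x_2 = 1$, $x_1 + 3x_2 \leq 9$, $x_1 \geq 0$. The function name `upper_bound` is hypothetical. It returns the bound that a pair of multipliers certifies, or `None` if the pair breaks one of the restrictions.

```python
def upper_bound(y1, y2):
    """Return y1 + 9*y2 if (y1, y2) certifies an upper bound, else None."""
    x1_ok = 2 * y1 + y2 >= 5      # x1 >= 0, so the x1 coefficient may exceed 5
    x2_ok = -y1 + 3 * y2 == -6    # x2 is free, so its coefficient must match exactly
    y2_ok = y2 >= 0               # a negative multiplier would flip the <= constraint
    if x1_ok and x2_ok and y2_ok:
        return y1 + 9 * y2
    return None


print(upper_bound(9, 1))  # 18, the original guess
print(upper_bound(6, 0))  # 6, a tighter bound
print(upper_bound(3, 1))  # None, the x2 coefficient is 0 rather than -6
```

Note that $y_1$ gets no sign check at all, which matches the argument above: it multiplies an equality.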

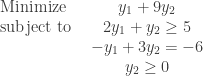

Putting all of these restrictions on $y_1$ and $y_2$ together we find that the problem of using the primal’s constraints to find the best upper bound on the optimal primal objective entails solving the following linear program:

$$\begin{aligned} \text{Minimize } \quad & y_1 + 9y_2 \\ \text{subject to } \quad & 2y_1 + y_2 \geq 5, \\ & -y_1 + 3y_2 = -6, \\ & y_2 \geq 0. \end{aligned}$$

And that’s the dual.
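As a sanity check, here is a small sketch confirming that the best upper bound found this way actually meets the primal optimum for this example. It assumes the primal is: maximize $5x_1 - 6x_2$ subject to $2x_1 - x_2 = 1$, $x_1 + 3x_2 \leq 9$, $x_1 \geq 0$, and the dual is: minimize $y_1 + 9y_2$ subject to $2y_1 + y_2 \geq 5$, $-y_1 + 3y_2 = -6$, $y_2 \geq 0$. Rather than call a solver, it uses each problem's equality constraint to reduce it to one variable. The helper names are made up.

```python
from fractions import Fraction


# Primal: the equality gives x2 = 2*x1 - 1, and then x1 + 3*x2 <= 9
# becomes 7*x1 <= 12. Feasible points are exactly 0 <= x1 <= 12/7.
def primal_value(x1):
    x2 = 2 * x1 - 1
    return 5 * x1 - 6 * x2


# A linear function on an interval is maximized at an endpoint.
primal_opt = max(primal_value(x1) for x1 in (Fraction(0), Fraction(12, 7)))


# Dual: the equality gives y1 = 3*y2 + 6, and then 2*y1 + y2 = 7*y2 + 12,
# which is at least 5 for every y2 >= 0. Feasible points are exactly y2 >= 0.
def dual_value(y2):
    y1 = 3 * y2 + 6
    return y1 + 9 * y2


# The dual objective increases with y2, so its minimum is at y2 = 0.
assert dual_value(Fraction(1)) > dual_value(Fraction(0))
dual_opt = dual_value(Fraction(0))

print(primal_opt, dual_opt)  # 6 6
```

Both values come out to 6, attained at $(x_1, x_2) = (0, -1)$ and $(y_1, y_2) = (6, 0)$, so for this example the best upper bound is tight.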

It’s probably worth summarizing the implications of this argument for all possible forms of the primal and dual. The following table is taken from p. 214 of *Introduction to Operations Research*, 8th edition, by Hillier and Lieberman. They refer to this as the SOB method, where SOB stands for Sensible, Odd, or Bizarre, depending on how likely one is to find that particular constraint or variable restriction in a maximization or minimization problem.

| Label | Primal Problem (or Dual Problem): Maximization | | Dual Problem (or Primal Problem): Minimization |
|---|---|---|---|
| Sensible | ≤ constraint | paired with | nonnegative variable |
| Odd | = constraint | paired with | unconstrained variable |
| Bizarre | ≥ constraint | paired with | nonpositive variable |
| Sensible | nonnegative variable | paired with | ≥ constraint |
| Odd | unconstrained variable | paired with | = constraint |
| Bizarre | nonpositive variable | paired with | ≤ constraint |
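The table translates directly into a lookup. The sketch below is one possible encoding for the maximization-to-minimization direction only. The function `dualize_max` and its dictionary layout are invented for illustration, and the example data assumes the primal is: maximize $5x_1 - 6x_2$ subject to $2x_1 - x_2 = 1$, $x_1 + 3x_2 \leq 9$, $x_1 \geq 0$.

```python
def dualize_max(c, A, b, constraint_senses, variable_signs):
    """Build the dual (a minimization) of: max c.x subject to A x (senses) b.

    constraint_senses: one of "<=", "=", ">=" per primal constraint.
    variable_signs:    one of ">=0", "free", "<=0" per primal variable.
    """
    # Primal constraint sense -> sign restriction on the matching dual variable.
    sense_to_sign = {"<=": ">=0", "=": "free", ">=": "<=0"}
    # Primal variable sign -> sense of the matching dual constraint.
    sign_to_sense = {">=0": ">=", "free": "=", "<=0": "<="}

    return {
        "objective": list(b),                      # minimize b.y
        "A": [list(col) for col in zip(*A)],       # transpose of A
        "rhs": list(c),
        "constraint_senses": [sign_to_sense[s] for s in variable_signs],
        "variable_signs": [sense_to_sign[s] for s in constraint_senses],
    }


dual = dualize_max(
    c=[5, -6],
    A=[[2, -1], [1, 3]],
    b=[1, 9],
    constraint_senses=["=", "<="],
    variable_signs=[">=0", "free"],
)
print(dual["constraint_senses"], dual["variable_signs"])
# ['>=', '='] ['free', '>=0']
```

For the example this gives a $\geq$ constraint and an $=$ constraint, with the first dual variable free and the second nonnegative, in agreement with the argument above.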
